The Nutanix Cloud Bible

The purpose of The Nutanix Cloud Bible is to provide in-depth technical information about the Nutanix platform architecture and how it can enable smooth operations across cloud, edge, and core environments.

The Nutanix Volumes feature (previously known as Acropolis Volumes) exposes back-end DSF storage to external consumers (guest OS, physical hosts, containers, etc.) via iSCSI.

This allows any operating system to access DSF and leverage its storage capabilities. In this deployment scenario, the OS talks directly to Nutanix, bypassing any hypervisor.

Core use-cases for Volumes:

The solution is iSCSI spec compliant; the qualified operating systems are simply those that have been validated by QA.

The following entities compose Volumes:

NOTE: On the backend, a VG’s disk is just a vDisk on DSF.

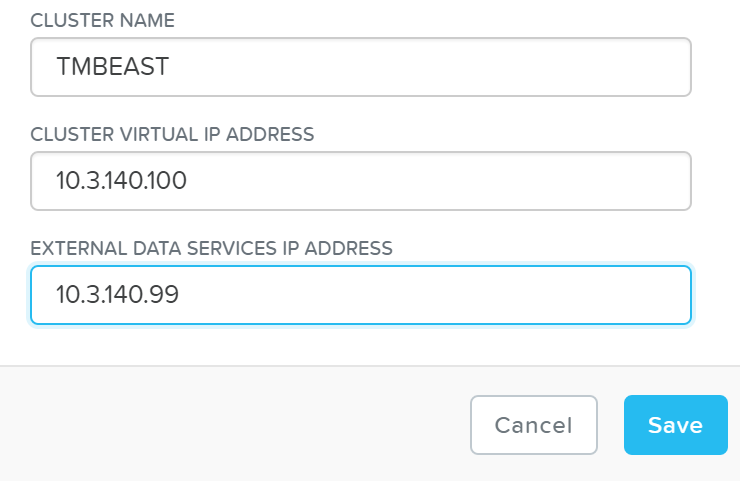

Before we get to configuration, we need to set the Data Services IP, which will act as our central discovery / login portal.

We’ll set this on the ‘Cluster Details’ page (Gear Icon -> Cluster Details):

Volumes - Data Services IP


This can also be set via NCLI / API:

    ncli cluster edit-params external-data-services-ip-address=DATASERVICESIPADDRESS

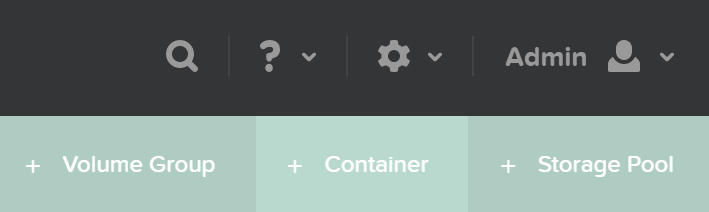

To use Volumes, the first thing we’ll do is create a ‘Volume Group’ which is the iSCSI target.

From the ‘Storage’ page, click on ‘+ Volume Group’ in the right-hand corner:

Volumes - Add Volume Group


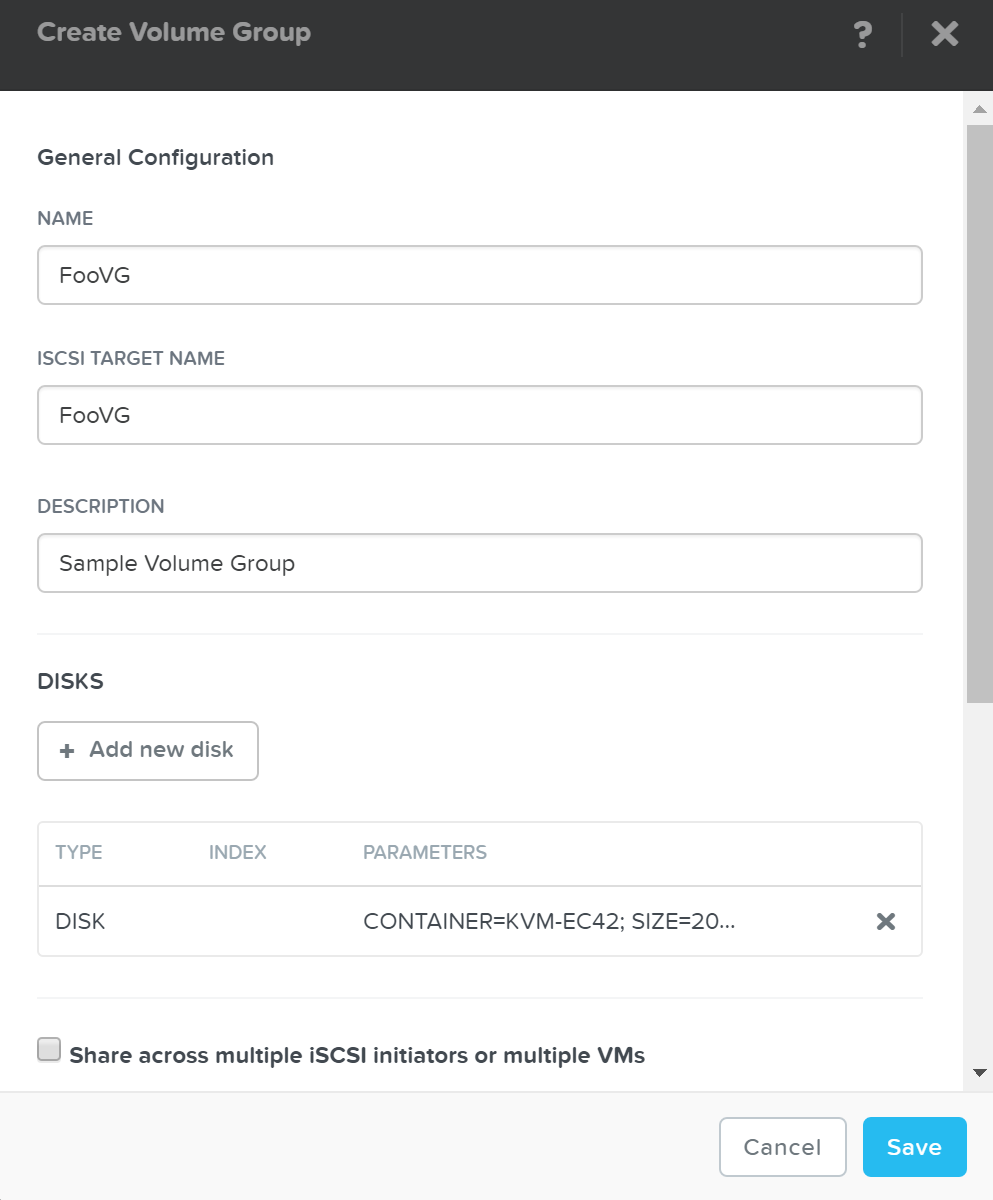

This will launch a menu where we’ll specify the VG details:

Volumes - Add VG Details


Next we’ll click on ‘+ Add new disk’ to add any disk(s) to the target (visible as LUNs):

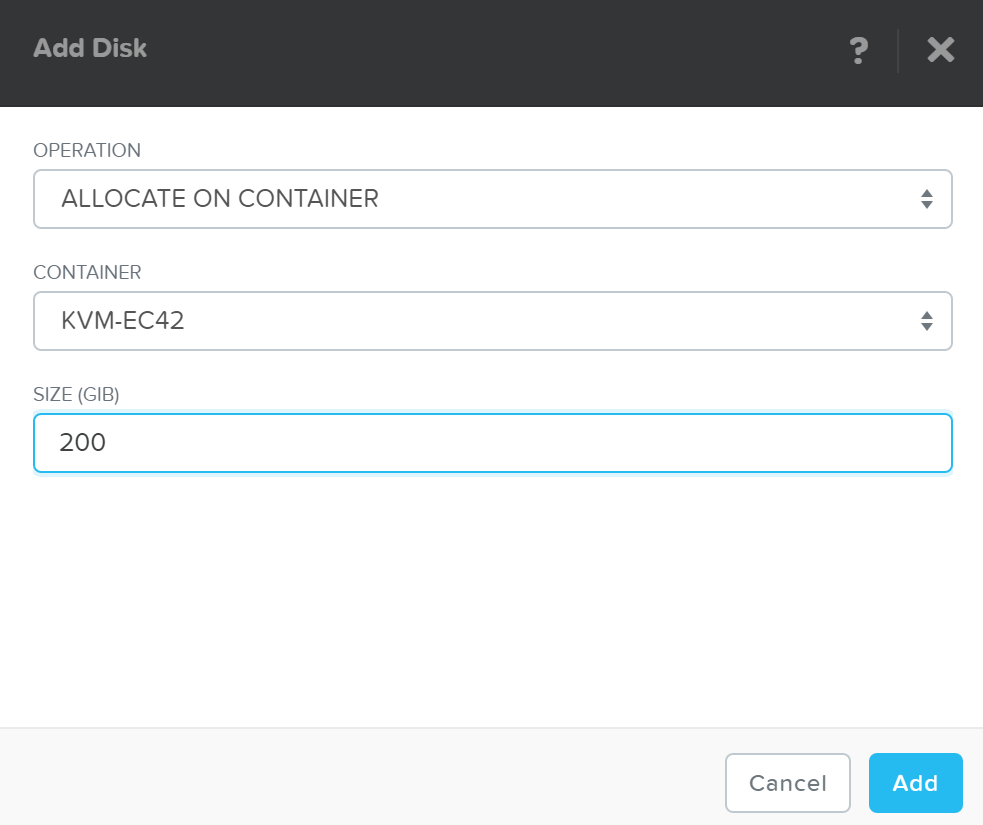

A menu will appear allowing us to select the target container and size of the disk:

Volumes - Add Disk


Click ‘Add’ and repeat this for however many disks you’d like to add.

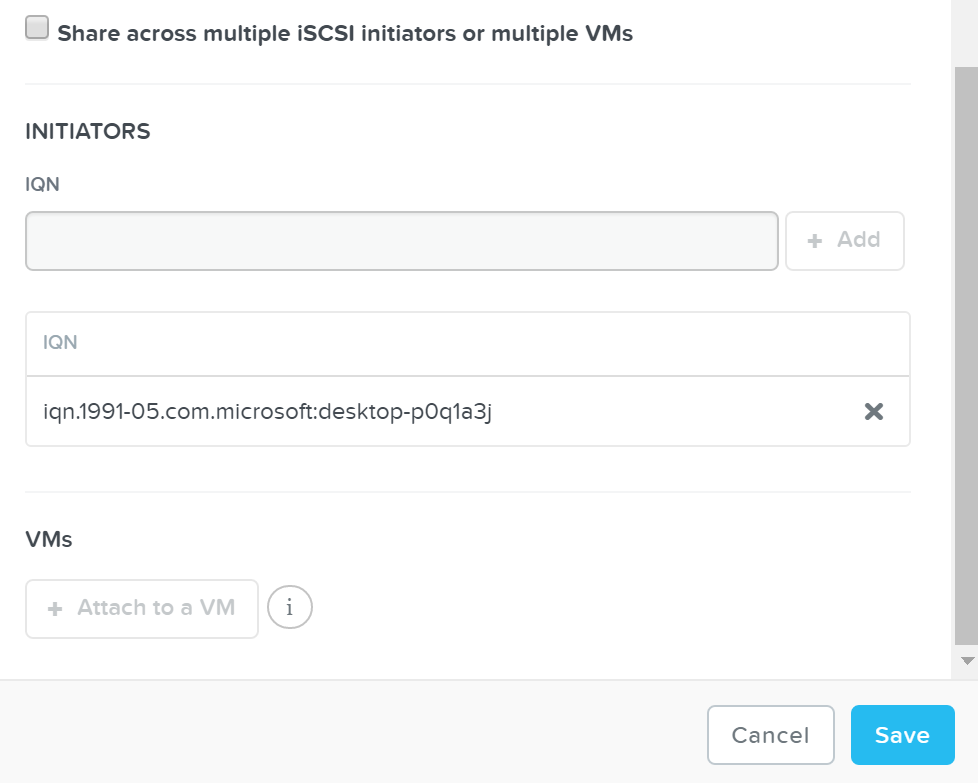

Once we’ve specified the details and added disk(s) we’ll attach the Volume Group to a VM or Initiator IQN. This will allow the VM to access the iSCSI target (requests from an unknown initiator are rejected):

Volumes - Initiator IQN / VM


Click ‘Save’ and the Volume Group configuration is complete!

This can all be done via ACLI / API as well:

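    # Create the Volume Group (the iSCSI target)
    vg.create VGName
    # Add a disk; create_size is the disk size, e.g. 500G
    vg.disk_create VGName container=CTRName create_size=500G
    # Attach an external initiator by its IQN
    vg.attach_external VGName InitiatorIQN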
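When a Volume Group needs several disks or initiators, the three ACLI commands above are simply repeated. A minimal sketch of generating that command sequence is shown here; the helper name `build_vg_commands` and the sample VG, container, and IQN values are hypothetical, and the function only produces text for review rather than executing anything against a cluster.

```python
def build_vg_commands(vg_name, container, disk_sizes, initiator_iqns):
    """Return ACLI command lines: create the VG, add one disk per size, attach each initiator.

    Only the three commands shown in the text are used; nothing is executed here.
    """
    commands = [f"vg.create {vg_name}"]
    for size in disk_sizes:
        commands.append(
            f"vg.disk_create {vg_name} container={container} create_size={size}"
        )
    for iqn in initiator_iqns:
        commands.append(f"vg.attach_external {vg_name} {iqn}")
    return commands


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for line in build_vg_commands(
        "vg-demo",
        "default-container",
        ["500G", "500G"],
        ["iqn.1991-05.com.microsoft:desktop-foo"],
    ):
        print(line)
```

The printed lines can be pasted into an ACLI session once the names have been checked.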

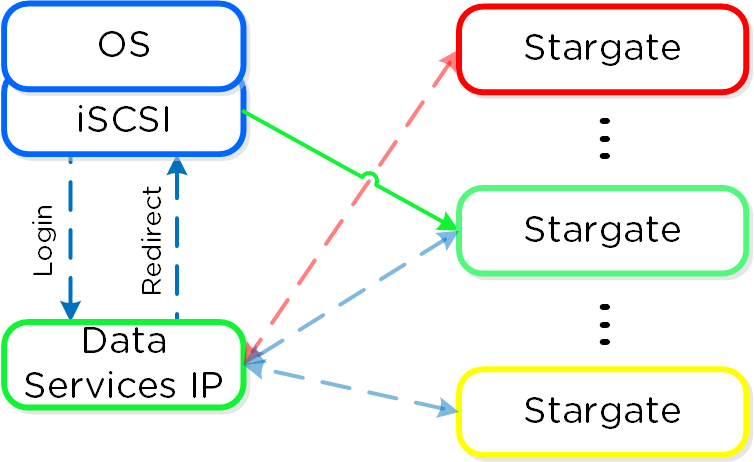

As mentioned previously, the Data Services IP is leveraged for discovery. This provides a single address that clients can use without needing to know individual CVM IP addresses.

The Data Services IP will be assigned to the current iSCSI leader. If that leader fails, a new iSCSI leader is elected and assigned the Data Services IP. This ensures the discovery portal always remains available.
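The behavior of the Data Services IP following the iSCSI leader can be illustrated with a small toy model. This is only a sketch: the class `IscsiPortal` and the election rule (first healthy CVM in sorted order) are assumptions made for illustration, since the text does not describe how the leader is actually chosen.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class IscsiPortal:
    """Toy model: one floating Data Services IP owned by the current iSCSI leader."""

    data_services_ip: str
    cvm_healthy: Dict[str, bool]  # CVM IP -> currently healthy?
    leader: Optional[str] = None

    def elect_leader(self) -> Optional[str]:
        # Hypothetical rule: first healthy CVM in sorted order becomes leader.
        candidates = sorted(ip for ip, ok in self.cvm_healthy.items() if ok)
        self.leader = candidates[0] if candidates else None
        return self.leader

    def mark_down(self, cvm_ip: str) -> None:
        # A failed leader triggers a new election; the portal IP itself never changes.
        self.cvm_healthy[cvm_ip] = False
        if cvm_ip == self.leader:
            self.elect_leader()


if __name__ == "__main__":
    portal = IscsiPortal(
        "10.0.0.50",
        {"10.0.0.11": True, "10.0.0.12": True, "10.0.0.13": True},
    )
    portal.elect_leader()
    portal.mark_down("10.0.0.11")
    print(portal.data_services_ip, "is now held by", portal.leader)
```

The point of the model is that initiators only ever see `data_services_ip`; which CVM holds it is an internal detail.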

The iSCSI initiator is configured with the Data Services IP as the iSCSI target portal. Upon a login request, the platform will perform an iSCSI login redirect to a healthy Stargate.

Volumes - Login Redirect


If the active (affined) Stargate goes down, the initiator retries the iSCSI login to the Data Services IP, which then redirects it to another healthy Stargate.

Volumes - Failure Handling
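The login redirect and failure handling described above can be sketched as a small simulation. The class `RedirectPortal` and its fields are hypothetical, and choosing the first healthy Stargate as the fallback is an assumption; the real placement logic is not described at this point in the text.

```python
class RedirectPortal:
    """Toy model of the Data Services IP redirecting iSCSI logins to a Stargate."""

    def __init__(self, stargate_ips):
        self.health = {ip: True for ip in stargate_ips}  # CVM IP -> healthy?
        self.affinity = {}  # target name -> affined CVM IP

    def login(self, target):
        """Return the CVM IP that a login for `target` is redirected to."""
        healthy = [ip for ip, ok in self.health.items() if ok]
        if not healthy:
            raise ConnectionError("no healthy Stargate available")
        # First login pins the target to a Stargate (its affined Stargate).
        affined = self.affinity.setdefault(target, healthy[0])
        if self.health[affined]:
            return affined
        # Affined Stargate is down: redirect the retry to another healthy one.
        return healthy[0]


if __name__ == "__main__":
    portal = RedirectPortal(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
    print("initial login ->", portal.login("vg-demo-tgt0"))
    portal.health["10.0.0.11"] = False  # affined Stargate fails
    print("retry login   ->", portal.login("vg-demo-tgt0"))
```

From the initiator's side the only action is to retry the login against the same portal address; the redirect target changes behind it.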


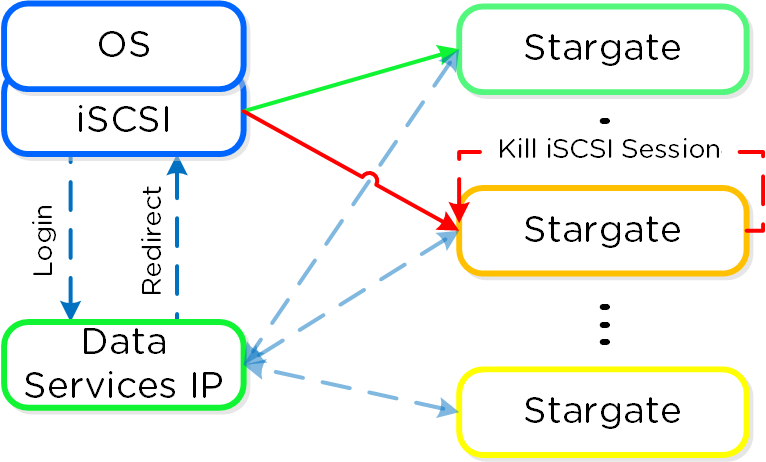

If the affined Stargate comes back up and is stable, the currently active Stargate will quiesce I/O and kill the active iSCSI session(s). When the initiator re-attempts the iSCSI login, the Data Services IP will redirect it to the affined Stargate.

Volumes - Failback


For Volumes, Stargate health is monitored using Zookeeper, the exact same mechanism DSF uses.

For failback, the default interval is 120 seconds. This means that once the affined Stargate has been healthy for 2 or more minutes, we will quiesce and close the session, forcing another login back to the affined Stargate.
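The failback condition reduces to a simple time comparison. The sketch below assumes the platform tracks the moment the affined Stargate last became healthy; the function name, its parameters, and that bookkeeping are hypothetical, and only the 120-second default comes from the text.

```python
FAILBACK_INTERVAL_S = 120  # default failback interval stated in the text


def should_fail_back(now_s, affined_healthy_since_s, interval_s=FAILBACK_INTERVAL_S):
    """Return True once the affined Stargate has been healthy for at least interval_s.

    affined_healthy_since_s is the time (in seconds) at which the affined
    Stargate last became healthy, or None while it is still down.
    """
    if affined_healthy_since_s is None:
        return False
    return now_s - affined_healthy_since_s >= interval_s


if __name__ == "__main__":
    # Affined Stargate recovered at t=100s; sessions are closed from t=220s onward.
    for t in (150, 219, 220, 300):
        print(t, should_fail_back(t, 100))
```

Waiting for a sustained healthy period avoids bouncing sessions back to a Stargate that is still flapping.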

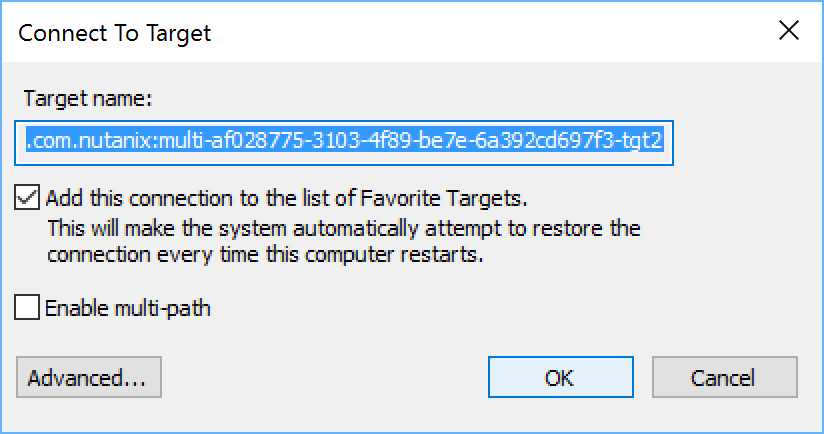

Given this mechanism, client side multipathing (MPIO) is no longer necessary for path HA. When connecting to a target, there’s now no need to check ‘Enable multi-path’ (which enables MPIO):

Volumes - No MPIO


The iSCSI protocol spec mandates a single iSCSI session (TCP connection) per target, between initiator and target. This means there is a 1:1 relationship between a Stargate and a target.

As of 4.7, 32 (default) virtual targets will be automatically created per attached initiator and assigned to each disk device added to the volume group (VG). This provides an iSCSI target per disk device. Previously this would have been handled by creating multiple VGs with a single disk each.

When looking at the VG details in ACLI/API you can see the 32 virtual targets created for each attachment:

    attachment_list {
      external_initiator_name: "iqn.1991-05.com.microsoft:desktop-foo"
      target_params {
        num_virtual_targets: 32
      }
    }

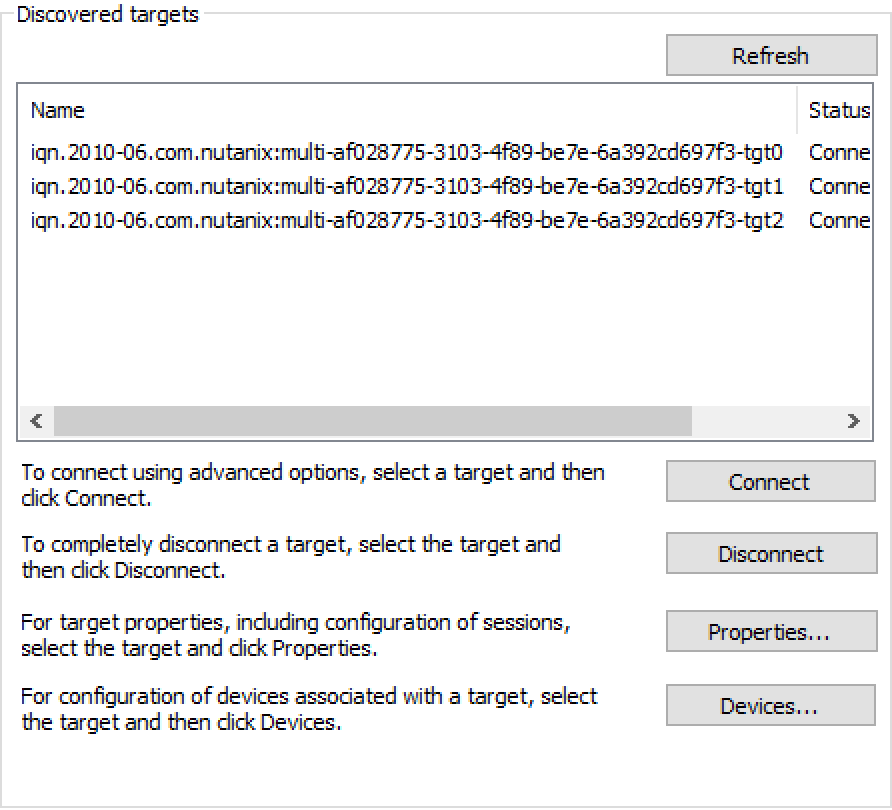

Here we’ve created a sample VG with 3 disk devices added to it. When performing a discovery on the client, we can see an individual target for each disk device (with a suffix in the format of ‘-tgt[int]’):

Volumes - Virtual Target
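The per-disk target naming can be sketched as follows. The base target name used here and the choice to start the suffix index at 0 are assumptions for illustration; the text only specifies the ‘-tgt[int]’ suffix format and the default of 32 virtual targets. How disks map to targets beyond that default is not covered in the text, so the sketch refuses that case rather than guessing.

```python
def virtual_target_names(base_target, num_disks, num_virtual_targets=32):
    """Return one target name per disk device, suffixed '-tgt<int>'.

    Assumption: suffix indices start at 0 and follow disk order.
    """
    if num_disks > num_virtual_targets:
        raise ValueError("sketch does not model more disks than virtual targets")
    return [f"{base_target}-tgt{i}" for i in range(num_disks)]


if __name__ == "__main__":
    # Hypothetical base target name for a VG with 3 disk devices.
    for name in virtual_target_names("iqn.2010-06.com.nutanix:vg-demo", 3):
        print(name)
```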


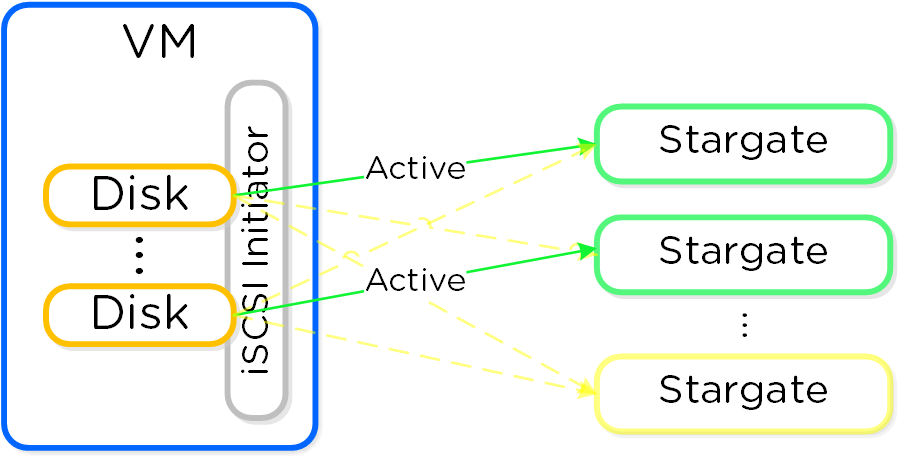

This allows each disk device to have its own iSCSI session and the ability for these sessions to be hosted across multiple Stargates, increasing scalability and performance:

Volumes - Multi-Path


Load balancing occurs during iSCSI session establishment (iSCSI login), for each target.

You can view the active Stargate(s) hosting the virtual target(s) with the following command (will display CVM IP for hosting Stargate):

    # Windows
    Get-NetTCPConnection -State Established -RemotePort 3205

    # Linux
    iscsiadm -m session -P 1

As of 4.7, a simple hash function is used to distribute targets across cluster nodes. In 5.0 this is integrated with the Dynamic Scheduler, which will re-balance sessions if necessary. We will continue to look at the algorithm and optimize as necessary. It is also possible to set a preferred node, which will be used as long as it is in a healthy state.
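Hash-based placement with an optional preferred node can be sketched as below. The text does not say which hash function is used, so SHA-256 here is a stand-in chosen only because it is deterministic across runs; the function name `pick_node` and its parameters are likewise hypothetical. The Dynamic Scheduler re-balancing mentioned for 5.0 is not modeled.

```python
import hashlib


def pick_node(target_name, healthy_nodes, preferred=None):
    """Choose the node that hosts a target's iSCSI session.

    A healthy preferred node always wins; otherwise the target name is
    hashed and mapped onto the sorted list of healthy nodes.
    """
    if not healthy_nodes:
        raise ValueError("no healthy nodes")
    if preferred is not None and preferred in healthy_nodes:
        return preferred
    digest = hashlib.sha256(target_name.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(healthy_nodes)
    return sorted(healthy_nodes)[index]


if __name__ == "__main__":
    nodes = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
    for i in range(3):
        target = f"vg-demo-tgt{i}"
        print(target, "->", pick_node(target, nodes))
    print("preferred ->", pick_node("vg-demo-tgt0", nodes, preferred="10.0.0.13"))
```

Because the hash depends only on the target name and the set of healthy nodes, repeated logins for the same target land on the same node until cluster membership changes.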

Volumes supports the SCSI UNMAP (TRIM) command in the SCSI T10 specification. This command is used to reclaim space from deleted blocks.

©2025 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product and service names mentioned are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned are for identification purposes only and may be the trademarks of their respective holder(s).